It looks like a glass, which rises sharply upwards. If we look at what we got, we can see that we have a 3D surface. Which will lead us to the following equation: If we multiply both sides of the equation by n we get: Let’s see an example, let’s take all the y values, and divide them by n since it’s the mean, and call it y(HeadLine). Let’s define, for each one, a new character which will represent the mean of all the squared values. We will take all the y, and (-2ymx) and etc, and we will put them all side-by-side.Īt this point we’re starting to be messy, so let’s take the mean of all squared values for y, xy, x, x². We will take each part and put it together. I colored the difference between the equations to make it easier to understand. Let’s begin by opening all the brackets in the equation. Let’s rewrite this expression to simplify it. Let’s take each point on the graph, and we’ll do our calculation (y-y’)².īut what is y’, and how do we calculate it? We do not have it as part of the data.īut we do know that, in order to calculate y’, we need to use our line equation, y=mx+b, and put the x in the equation. You can skip to the next part if you want.Īs you know, the line equation is y=mx+b, where m is the slope and b is the y-intercept. This part is for people who want to understand how we got to the mathematical equations.

Our goal is to minimize this mean, which will provide us with the best line that goes through all the points.

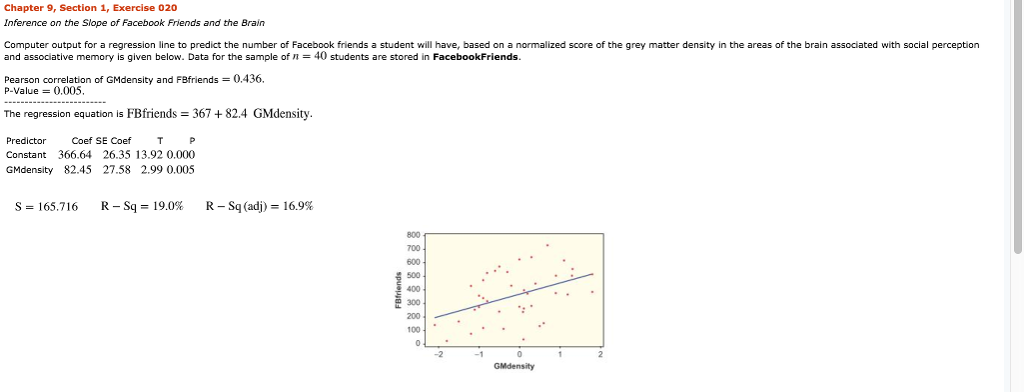

The y’ point sits on the line we created. For each point, we take the y-coordinate of the point, and the y’-coordinate.Let’s imagine this like an array of points, where we go through all the points, from the first (i=1) to the last (i=n). It is the sum of a sequence of numbers, from i=1 to n. In mathematics, the character that looks like weird E is called summation (Greek sigma).Let’s analyze what this equation actually means. Let’s define a mathematical equation that will give us the mean squared error for all our points. We want to find M ( slope) and B ( y-intercept) that minimizes the squared error! You should remember this equation from your school days, y=Mx+B, where M is the slope of the line and B is y-intercept of the line. Each error is the distance from the point to its predicted point. The red line between each purple point and the prediction line are the errors.This is a line that passes through all the points and fits them in the best way. Each point has an x-coordinate and a y-coordinate. the purple dots are the points on the graph.You might be asking yourself, what is this graph? Of course, my drawing isn’t the best, but it’s just for demonstration purposes. I will take an example and I will draw a line between the points. Let’s say we have seven points, and our goal is to find a line that minimizes the squared distances to these different points. An explanation of the mathematical formulae we received and the role of each variable in the formula.This section is for those who want to understand how we get the mathematical formulas later, you can skip it if that doesn’t interest you. 
The mathematical part which contains algebraic manipulations and a derivative of two-variable functions for finding a minimum.Get a feel for the idea, graph visualization, mean squared error equation.The fact that MSE is almost always strictly positive (and not zero) is because of randomness or because the estimator does not account for information that could produce a more accurate estimate. MSE is a risk function, corresponding to the expected value of the squared error loss. This is the definition from Wikipedia: In statistics, the mean squared error (MSE) of an estimator (of a procedure for estimating an unobserved quantity) measures the average of the squares of the errors - that is, the average squared difference between the estimated values and what is estimated. We will define a mathematical function that will give us the straight line that passes best between all points on the Cartesian axis.Īnd in this way, we will learn the connection between these two methods, and how the result of their connection looks together. The example consists of points on the Cartesian axis. This article will deal with the statistical method mean squared error, and I’ll describe the relationship of this method to the regression line. By Moshe Binieli Machine learning: an introduction to mean squared error and regression lines Introduction image Introduction
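As a rough sketch of the mean squared error discussed in this article, the Python snippet below computes the MSE of a candidate line y=mx+b in two ways: directly as the mean of the squared errors (y-y')², and through the means of y², xy, y, x² and x. The function names `mse_direct` and `mse_expanded` and the seven sample points are made up for illustration and do not come from the article.

```python
from statistics import fmean


def mse_direct(xs, ys, m, b):
    """Mean of (y - y')^2, where y' = m*x + b is the predicted value."""
    return fmean((y - (m * x + b)) ** 2 for x, y in zip(xs, ys))


def mse_expanded(xs, ys, m, b):
    """The same quantity, written with the means of y^2, xy, y, x^2 and x."""
    mean_y2 = fmean(y * y for y in ys)
    mean_xy = fmean(x * y for x, y in zip(xs, ys))
    mean_y = fmean(ys)
    mean_x2 = fmean(x * x for x in xs)
    mean_x = fmean(xs)
    return (mean_y2 - 2 * m * mean_xy - 2 * b * mean_y
            + m * m * mean_x2 + 2 * m * b * mean_x + b * b)


# Hypothetical sample data: seven made-up points, not taken from the article.
xs = [1, 2, 3, 4, 5, 6, 7]
ys = [1.5, 3.8, 6.7, 9.0, 11.2, 13.6, 16.0]

# Try a few candidate lines; both forms should agree up to rounding.
for m, b in [(1.0, 0.0), (2.0, 0.0), (2.4, -1.0), (3.0, 0.0)]:
    direct = mse_direct(xs, ys, m, b)
    expanded = mse_expanded(xs, ys, m, b)
    print(f"m={m}, b={b}: direct={direct:.4f}, expanded={expanded:.4f}")
```

Trying different values of m and b by hand shows the MSE going up and down, which is the quantity we want to minimize when looking for the best line.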
